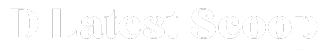

When Apple unveiled new AI features this week, much of the coverage focused on the company's partnership with OpenAI to bring ChatGPT to millions of iPhones, a position Google has tried to negotiate for itself.

But Apple and Google have been working together behind the scenes for months, with Google giving Apple access to its data centers to train the iPhone maker's new AI models.

Apple has long relied on Google and Amazon cloud services to store data from its products. For example, when users back up their Apple devices to iCloud, that data is often stored in Google's data centers. Neither company talks much about the arrangement, but it gives Apple access to thousands of Google machines, which help it provide many of the software features that iPhone users love and rely on.

Apple's TPU request

To train the AI models that power Apple Intelligence, its new suite of AI features, the iPhone maker asked Google for additional access to its Tensor Processing Units (TPUs). TPUs are chips designed specifically for AI, which Google rents out through its cloud service as an alternative to Nvidia GPUs.

Apple's request caused a scramble at Google in April, when Googlers realized that technical issues could prevent them from delivering what Apple wanted on time.

Internally, the incident was declared an “OMG,” Google's term for a one-off emergency that doesn't warrant an emergency code, and a strategy meeting was convened, according to a person with direct knowledge of the incident.

“Bigfoot”

The team delivered the work for Apple after several long days, according to people familiar with the matter. But the episode came at a potentially risky moment with Apple, which Google Cloud employees have nicknamed “Bigfoot” for its extensive use of Google's data centers.

Spokespeople for Google and Apple did not respond to requests for comment.

The deal highlights just how far behind Apple still is in the race for generative AI. Most of the advanced features of AI models must be processed, at least in part, in giant, energy-hungry data centers that companies like Microsoft, Google and Amazon have spent years building. As a result, Apple increasingly has to rely on these rivals as it treads water in the AI race.

As eagle-eyed Apple watchers have pointed out, Apple's technical documentation suggests a partnership with Google, stating that the company's AI models were trained using a combination of methods “including TPUs and both cloud and on-premise GPUs.”

When users actually try Apple's new AI features, much of the work will be done on the device itself, with more intensive tasks handed off to dedicated data centers running what Apple says is new Apple-designed silicon. It's unclear where these servers are actually located.

An unexpected partnership

But Apple is facing a new reality: as cloud computing and chips for training AI models become hot commodities, it is being forced to partner with competitors.

Apple's deal with OpenAI, for example, gives users access to ChatGPT, a more advanced chatbot than Apple can offer on its own. The deal is also a boon for OpenAI, giving it access to Apple's massive user base.

The AI wars are forcing tech companies to forge these crucial, and sometimes unexpected, relationships. On Tuesday, Microsoft and Oracle announced a new deal that will give Microsoft access to Oracle cloud servers to run some OpenAI workloads. Until now, OpenAI ran only on Microsoft's servers.

Bloomberg previously reported that Google and Apple were also in talks to bring Google's Gemini AI to iOS devices. No deal has been finalized so far, but that doesn't mean one won't happen.

In an interview after the keynote, Apple executive Craig Federighi made it clear that Apple is actively looking to expand its business with AI partners, even going so far as to mention Google.

“Ultimately, we want to let users choose the model they want, which may be Google Gemini in the future,” he said. “Nothing to announce at this time.”