Online scammers are using AI voice cloning technology to make it appear as though celebrities like Steve Harvey and Taylor Swift are encouraging fans to sign up for health benefits scams on YouTube. 404 Media first reported on the trend this week. These are just the latest examples of scammers taking advantage of increasingly accessible generative AI tools to target economically impoverished communities and impersonate celebrities for quick financial gain.

A tipster pointed 404 Media to more than 1,600 YouTube videos in which deepfaked celebrity voices, as well as non-celebrities, promote the scams. Many of these videos remain active as of this writing and have reportedly racked up 195 million views. The videos appear to violate several of YouTube's policies, particularly those regarding misrepresentation, spam, and deceptive practices. YouTube did not immediately respond to PopSci's request for comment.

How does the scam work?

Scammers try to fool viewers using chopped-up clips of celebrities paired with voiceovers created by AI tools that mimic the celebrities' own voices. Steve Harvey, Oprah, Taylor Swift, podcaster Joe Rogan, and comedian Kevin Hart have all had deepfaked versions of their voices used to promote the scams. Some videos don't use celebrity deepfakes at all, instead relying on a recurring cast of real people who tout different variations of a similar story. The videos are often posted by YouTube accounts with misleading names such as “USReliefGuide,” “ReliefConnection,” and “Health Market Navigators.”

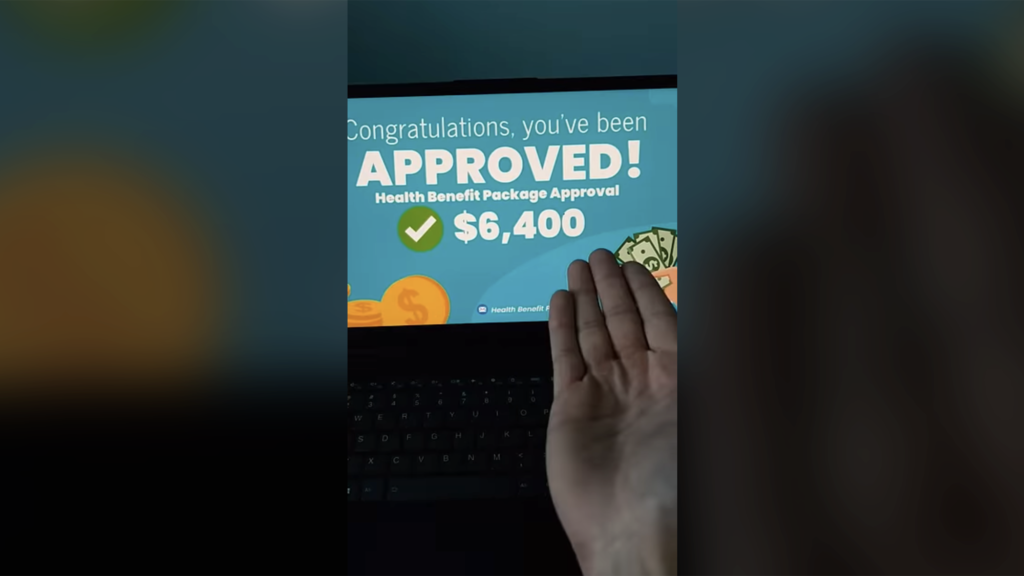

“I’ve been telling you all for months to claim this $6,400,” a deepfake clone attempting to impersonate Family Feud host Steve Harvey says. “Anyone can get it, even if they're unemployed!” This video alone, which was still on YouTube at the time of writing, has been viewed over 18 million times.

The exact wording of the scam varies from video to video, but it usually follows a basic template. First, the deepfaked celebrities or actors alert viewers to a $6,400 year-end stimulus check that the U.S. government will supposedly provide through a “health spending card.” The celebrity voice then says anyone can apply for the stimulus check as long as they're not enrolled in Medicare or Medicaid. Viewers are then typically prompted to click on a link to claim the offer. Like many effective scams, the videos create a sense of urgency by trying to convince viewers that the fake deal won't last long.

In reality, victims who click on these links are often redirected to URLs with names like “secretsavingsusa.com” that are not actually affiliated with the U.S. government. PolitiFact reporters called the sign-up number listed on one of these sites and spoke with an “unidentified agent” who asked for their income, tax filing status, and date of birth, all sensitive personal data that can be used for identity fraud. In some cases, the scammers reportedly also ask for credit card numbers. The scam appears to use the confusion surrounding actual government health tax credits as a hook to reel in victims.

Although many government programs and grants exist to help people in need, a generic claim offering “free money” from the U.S. government is generally a red flag. The falling cost of generative AI technology that can create somewhat convincing imitations of celebrity voices can make these scams even more persuasive. The Federal Trade Commission (FTC) warned of this possibility in a blog post last year, citing clear examples of fraudsters using deepfakes and voice clones to commit extortion, financial fraud, and other illegal activities. A study published in PLOS One last year found that deepfake audio can already fool human listeners nearly 25% of the time.

The FTC declined to comment on this latest round of celebrity deepfake scams.

Affordable and easy-to-use AI technology is driving the rise in celebrity deepfake scams

This is not the first case of celebrity deepfake fraud, and it almost certainly won't be the last. Hollywood legend Tom Hanks recently apologized to his fans on Instagram after a deepfake clone of himself was spotted promoting a dental plan scam. Shortly thereafter, CBS anchor Gayle King said scammers were using similar deepfake techniques to make it appear as though she endorsed a weight loss product. More recently, scammers reportedly combined an AI clone of pop star Taylor Swift's voice with real images of her using Le Creuset cookware to try to persuade viewers to sign up for a kitchenware giveaway. Fans never received the shiny pots and pans.

Lawmakers are scrambling to draft new laws or clarify existing ones to address the growing problem. Several proposed bills, such as the Deepfakes Accountability Act and the No Fakes Act, would give individuals more power to control digital representations of their likeness. Just this week, a bipartisan group of five members of Congress introduced the No AI FRAUD Act, which aims to create a federal framework to protect individuals' rights to their digital likeness, with a focus on artists and performers. Still, it's unclear how likely these bills are to pass amid a flurry of new, quickly drafted AI legislation entering Congress.

Updated 01/11/23 8:49am: A YouTube spokesperson responded to PopSci with this statement: “We are constantly working to strengthen our enforcement systems to stay ahead of the latest trends and fraud techniques and respond quickly to new threats. We review shared videos and ads. We have already removed several videos and ads that violate our policies and are taking appropriate action against the accounts involved.”